Robots.txt is one of the most important files for technical SEO. It is the first thing a crawler requests when it visits your website, and it tells the crawler which parts of the site it may and may not crawl. A small mistake in a single directive can hurt crawlability, which in turn can affect your rankings.

In this blog post, we cover the most common mistakes people make when creating a robots.txt file and how to avoid them.

Common Robots.txt Mistakes

-

Not placing the file in the root directory

One common mistake is putting the file in the wrong location. A robots.txt file must always be placed in the root directory of your website. Crawlers only look for it there, so a file placed in a sub-directory will never be found.

Incorrect way – https://www.example.com/assets/robots.txt

Correct way – https://www.example.com/robots.txt
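As a rough sketch of where a crawler looks, the expected location can be derived from any page URL by keeping the scheme and host and replacing the path with /robots.txt. The helper name robots_txt_url is made up for this illustration.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the root-level robots.txt URL for the site serving page_url."""
    parts = urlsplit(page_url)
    # Keep the scheme and host; drop the path, query string and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://www.example.com/assets/logo.png"))
# https://www.example.com/robots.txt
```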

-

Improper use of wildcards

Wildcards are special characters used in the directives of a robots.txt file. Major crawlers such as Googlebot support two of them: * and $. The * character matches zero or more instances of any valid character (in a User-Agent line it stands for all crawlers). The $ character marks the end of a URL. The example below shows how each one works.

Example of correct implementation

User-Agent: * (Here * stands for all user agents)

Disallow: /assets* (Here * matches anything that follows “/assets”, so every URL whose path starts with “/assets” is blocked. Rules are prefix matches, so the trailing * is optional)

Disallow: /*.pdf$ (This directive blocks any URL that ends with the .pdf extension)

Do not use wildcards unnecessarily or you might end up blocking an entire folder instead of a single URL.
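To see what a wildcard rule would catch before deploying it, the matching can be approximated in a few lines. This is a simplified sketch of the prefix matching with * and $ described above, not a full crawler implementation: it ignores percent-encoding and the precedence between Allow and Disallow rules, and the function name rule_matches is an assumption made for the example.

```python
import re


def rule_matches(pattern: str, path: str) -> bool:
    """Check a URL path against a robots.txt rule path that may use * and $."""
    # A trailing $ anchors the rule to the end of the URL.
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape the literal pieces; each * becomes "any run of characters".
    regex = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    if anchored:
        regex += r"\Z"
    # re.match anchors at the start, so unanchored rules act as prefix matches.
    return re.match(regex, path) is not None


print(rule_matches("/assets", "/assets/logo.png"))         # True
print(rule_matches("/assets", "/static/assets/logo.png"))  # False
print(rule_matches("/*.pdf$", "/docs/guide.pdf"))          # True
print(rule_matches("/*.pdf$", "/docs/guide.pdf?page=2"))   # False
```

Running a list of real URLs from the site through a check like this is a cheap way to spot a rule that blocks more than intended.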

-

Unnecessary use of trailing slash

Another common mistake is adding a trailing slash when blocking or allowing a URL in robots.txt. For example, say you wish to block the URL https://www.example.com/category.

What happens if you add an unnecessary trailing slash?

User-Agent: *

Disallow: /category/

This tells Googlebot not to crawl any URL inside the “/category/” folder. However, this rule will not block the URL example.com/category itself, because that URL does not end with a trailing slash.

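The ideal way to block the URL (this rule is a prefix match, so it also blocks every other URL whose path starts with “/category”)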

User-Agent: *

Disallow: /category
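The difference between the two rules can be checked with the urllib.robotparser module from the Python standard library. This is only a sketch: the helper is_allowed is a name chosen for this example, and the standard-library parser does plain prefix matching without wildcard support, so it is a reasonable stand-in for this case but not for wildcard rules.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_txt: str, url: str, agent: str = "*") -> bool:
    """Parse robots.txt text and report whether agent may fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


with_slash = "User-agent: *\nDisallow: /category/"
without_slash = "User-agent: *\nDisallow: /category"

page = "https://www.example.com/category"
print(is_allowed(with_slash, page))     # True: the page itself is still crawlable
print(is_allowed(without_slash, page))  # False: the page is blocked
```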

-

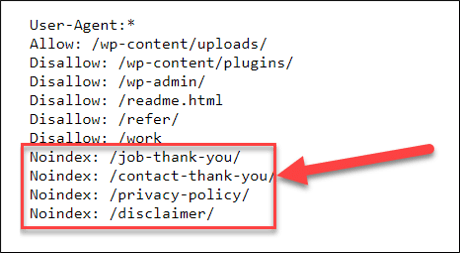

Using the NoIndex directive in robots.txt

This is an old practice that is no longer supported. Google officially announced that it would stop honoring the NoIndex directive in robots.txt files from September 1, 2019. If you are still using it, remove it. Instead, add a noindex value to the robots meta tag on the URLs you don’t want Google to index. Keep in mind that the page must remain crawlable (not disallowed in robots.txt), otherwise the crawler never sees the tag.

Example of NoIndex in robots.txt

Use the meta robots tag instead

<meta name="robots" content="noindex"/>

Add this snippet to the <head> of each page you want to keep out of Google’s index, rather than using a NoIndex directive in the robots.txt file.
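To confirm that a page really carries the tag, its HTML can be scanned for a robots meta tag. The sketch below uses the standard-library HTMLParser; the class and function names are invented for this example, and it only looks at the meta tag, not at an X-Robots-Tag HTTP header.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the directives found in <meta name="robots"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() == "robots":
            # The content attribute may hold several comma-separated values.
            for item in attr.get("content", "").split(","):
                self.directives.add(item.strip().lower())


def has_noindex(html: str) -> bool:
    """Return True if the HTML contains a robots meta tag with noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


print(has_noindex('<head><meta name="robots" content="noindex"/></head>'))  # True
```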

-

Not mentioning the sitemap URL

People often forget to mention the sitemap location in the robots.txt file. Specifying it lets crawlers discover the sitemap from the robots file itself, so Googlebot does not have to find it some other way. Making things easier for crawlers generally helps your website.

How to define sitemap location in robots file?

Simply add the directive below to your robots.txt file to declare your sitemap.

Sitemap: https://www.example.com/sitemap.xml
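A quick way to verify that the declaration is picked up is to parse the file and list the sitemaps it exposes. This sketch relies on RobotFileParser.site_maps(), available in Python 3.8 and later; the wrapper name declared_sitemaps and the sample file contents are assumptions for illustration.

```python
from urllib.robotparser import RobotFileParser


def declared_sitemaps(robots_txt: str) -> list:
    """Return the sitemap URLs declared in robots.txt text (empty if none)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # site_maps() returns None when no Sitemap line is present.
    return parser.site_maps() or []


robots_txt = """\
User-agent: *
Disallow: /assets

Sitemap: https://www.example.com/sitemap.xml
"""

print(declared_sitemaps(robots_txt))
# ['https://www.example.com/sitemap.xml']
```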

-

Blocking CSS and JS

People often worry that CSS and JS files may get indexed by Googlebot and end up blocking them in robots.txt. Google’s John Mueller has advised against blocking JS and CSS files, because Googlebot needs to crawl them to render the page correctly. If Googlebot cannot render a page, it may not index or rank that page properly. You can read more about Mueller’s advice here.

-

Not creating a dedicated robots.txt file for each sub-domain

Each sub-domain of a website, including staging sub-domains, should have its own robots.txt file, because crawlers request robots.txt separately from every host and do not apply the main domain’s file to its sub-domains. Without one, unwanted sub-domains (for example staging, APIs, and so on) can get crawled and indexed, and important sub-domains can be crawled inefficiently. Make sure a robots.txt file is defined and customized for each sub-domain.

-

Not blocking crawlers from accessing the staging site

All development efforts for a website are first tested on a staging or test website and then deployed on the main website. But one important thing people forget is that for Googlebot, a staging website is just like any other website. It can discover, crawl and index your staging website just like any other normal website. And if you don’t block the crawlers from crawling your staging site, there is a high chance that your staging URLs will get indexed and might even rank for some queries. This is the last thing you want.

People often reuse the robots.txt file from their main website on the staging website, which is a mistake. Always block crawlers from crawling your staging site. You can do so with these directives:

User-Agent: *

Disallow: /
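A deployment script could guard against shipping the wrong file to staging with a check along these lines. It is a spot check rather than a proof: it tests a handful of sample paths, and the function name, the sample paths and the staging.example.com host are all placeholders chosen for this sketch.

```python
from urllib.robotparser import RobotFileParser


def blocks_all_crawling(robots_txt: str,
                        sample_paths=("/", "/index.html", "/blog/post")) -> bool:
    """Return True if every sample path is disallowed for all user agents."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    base = "https://staging.example.com"  # placeholder host
    return not any(parser.can_fetch("*", base + path) for path in sample_paths)


staging_rules = "User-agent: *\nDisallow: /"
production_rules = "User-agent: *\nDisallow: /assets"

print(blocks_all_crawling(staging_rules))     # True
print(blocks_all_crawling(production_rules))  # False
```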

-

Ignoring case sensitivity

It is important to remember that URL paths are case sensitive for crawlers. For example, https://www.example.com/category and https://www.example.com/Category are two different URLs for a crawler. Hence, when defining directives in a robots.txt file, make sure the case of each rule matches the case of the actual URL.

Let’s say you want to block the URL https://www.example.com/news

Incorrect approach

User-Agent: *

Disallow: /News

Correct approach

User-Agent: *

Disallow: /news
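Case slips like this are easy to catch automatically by comparing the paths used in your rules against the real paths on the site. The following is a minimal sketch; find_case_mismatches is a hypothetical helper, and it compares whole paths only rather than prefixes.

```python
def find_case_mismatches(rule_paths, site_paths):
    """Return (rule, site_path) pairs that differ only by letter case."""
    mismatches = []
    for rule in rule_paths:
        for path in site_paths:
            # Same letters, different capitalization: likely a typo in the rule.
            if rule != path and rule.lower() == path.lower():
                mismatches.append((rule, path))
    return mismatches


print(find_case_mismatches(["/News", "/assets"], ["/news", "/assets"]))
# [('/News', '/news')]
```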

Conclusion

These are some of the most common robots.txt mistakes that can seriously harm SEO. Robots.txt is a small file that is easy to set up, but it is very important, so take care when writing it and double-check every directive.

Have you made any of these errors? What was the impact? Let us know in the comments section below.
