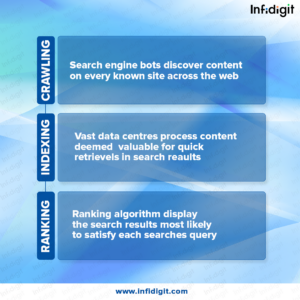

SEO (Search Engine Optimisation) is the practice of building the quality and quantity of traffic on your website. It is the process of optimising the web pages to organically achieve higher search rankings. Do you ever wonder what makes a search engine go around? It is fascinating how some mechanisms can systematically browse the World Wide Web for web indexing or web crawling.

In the ever-increasing SEO trends, let’s have a closer look at the primary function of Crawling & Indexing in delivering the search results

Crawling

Crawling is the process performed by the search engines where it uses their web crawlers to perceive any new links, any new website or landing pages, any changes to current data, broken links, and many more. The web crawlers are also known as ‘spiders’, ‘bots’ or ‘spider’. When the bots visit the website, they follow the Internal links through which they can crawl other pages of the site as well. Hence, creating the sitemap is one of the significant reasons to make it easier for the Google Bot to crawl the website. The sitemap contains a vital list of URLs.

(E.g., https://www.infidigit.com/sitemap_index.xml)

Whenever the bot crawls the website or the webpages, it goes through the DOM Model (Document Object Model). This DOM represents the logical tree structure of the website.

DOM is the rendered HTML & Javascript code of the page. Crawling the entire website at once is nearly impossible and would take a lot of time. Due to which the Google Bot crawls only the critical parts of the site, and are comparatively significant to measure individual statistics that could also help in ranking those websites.

Optimise Website For Google Crawler

Sometimes we come across specific scenarios wherein Google Crawler is not crawling various essential pages of the website. Hence, it is crucial for us to tell the search engine how to crawl the site. To do this, create and place robots.txt file in the root directory of the domain. (E.g., https://www.infidigit.com/robots.txt).

Robots.txt file helps the crawler to crawl the website systematically. Robots.txt file helps crawlers to understand which links are supposed to be crawled. If the bot doesn’t find the robots.txt file, it would eventually move ahead with its crawling process. It also helps in maintaining the Crawl Budget of the website.

Elements affecting the Crawling

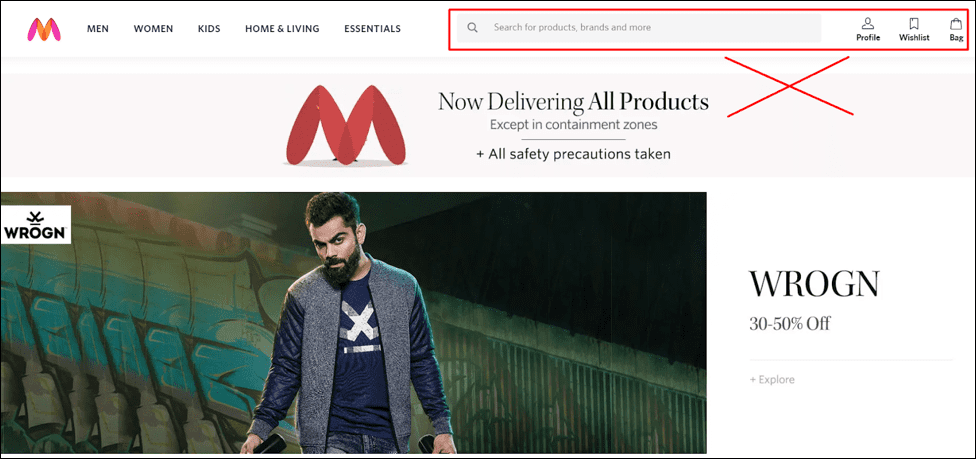

- A bot does not crawl the content behind the login forms, or if any page requires users to log in, as the login pages are secured pages.

- The Googlebot doesn’t crawl the search box information present on the site. Especially in ecommerce websites, many people think that when a user enters the product of their choice in the search box, they get crawled by the Google bot.

- There is no assurance that bot would crawl media forms like images, audios, videos, text, etc. Recommendations for the best practice is to add the text(as image name) in the <HTML> code.

- Manifestation of the websites for particular visitors( for example Pages shown to the bot are different from Users) is cloaking to the Search Engine Bots.

- At times search engine crawlers detect the link to enter your website from other websites present on the internet. Similarly, the crawler also needs the links on your site to navigate various other landing pages. Pages without any internal links assigned are known as ‘Orphan pages’ since crawlers do not discover any path to visit those pages. And, they are next to invisible to the bot while crawling the website.

- Search Engine crawlers get frustrated and leave the page when they hit the ‘Crawl errors’ on the website—crawl errors like 404, 500, and many more. The recommendation is to either redirect the web pages temporarily by performing ‘302 – redirect’ or 301 – permanent redirect’. Placing the bridge for search engine crawlers is essential.

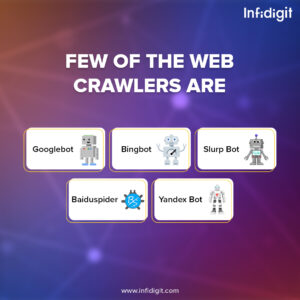

Few of the Web Crawlers are

-

Googlebot

Googlebot is Google’s web crawler (a spider or the robots) that is designed to crawl and index the websites. With no judgement, it only retrieves the searchable content present on the websites. The name refers to two separate types of web crawlers: one for desktop and another for mobile.

-

Bingbot

Bingbot is a type of internet bot deployed in October 2010 by Microsoft. It acts similar to Googlebot, collecting the document from the website to build searchable content on the SERPs.

-

Slurp Bot

Slurp bot produces the results for the Yahoo web crawler. It collects data from the partner’s website and personalises the content for the Yahoo search engine. These crawled pages confirm the authentication across the web pages for the users.

-

Baiduspider

Baidu’s spider is the robot for the Chinese search engine. The bot is a piece of code which, like every crawler collects data relevant to the user query. Gradually, it crawls and indexes the web pages on the internet.

-

Yandex Bot

Yandex is the search engine used in Russia and is the crawler for a search engine of the same name. Likewise, the Yandex bot continuously crawls the website and stores the relevant data in the database. It helps in producing relatable search results to the users. Yandex is the 5th largest search engine globally and holds 60% of the market share in Russia.

Now let’s move ahead to understand how Google indexes the pages.

Indexing

‘Index’ is the compilation of all the information or the pages crawled by the search engine crawler. Indexing is the process of storing this gathered information in the search index database. Indexed data then compares the previously stored data with SEO algorithm metrics compared to similar pages. Indexing is most vital as it helps in ranking the website.

How can you know what Google has indexed?

Type “site:your domain” in the search box to check how many pages are indexed on the SERP. This would show all the pages Google has indexed including pages, posts, images and many more.

The best way to make the URLs being indexed is to submit the sitemap in the Google Search Console, where all the important pages are listed in the sitemap.

Website indexing plays an essential role while displaying all the vital pages on the SERP. Suppose any content is not visible to the Googlebot, it won’t be indexable. Googlebot sees the complete website into different formats like HTML, CSS & Javascript format. The components which are not accessible will not be indexed.

How does Google decide what to index?

When a user types the query, Google attempts to obtain the most relevant answer from its crawled pages in the database. Google indexes the content according to their defined algorithms. It usually indexes the fresh content on the website, which Google believes will improve the user experience. The better the quality of the content, quality of links on the website is, the better it is for SEO.

Identifying how our websites make it to the indexing processes.

-

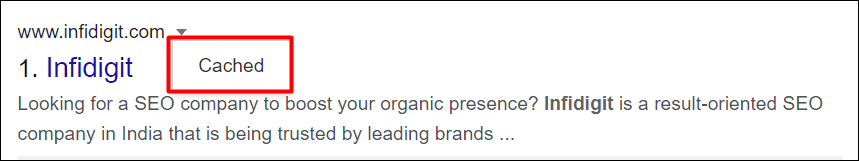

Cached version

Google often crawls the web pages. To check the cached version of the website, click on the ‘drop-down’ symbol beside the URL (as shown in the screenshot below). Another method involves writing ‘cache:https://www.infidigit.com’.

-

URLs eliminated

YES! Web pages can be removed after being indexed on SERP. Removed web pages could be returning 404 errors, it can be redirected URLs, could contain broken links, and many more. Also, the URLs would have a ‘noindex’ tag.

-

Meta tags

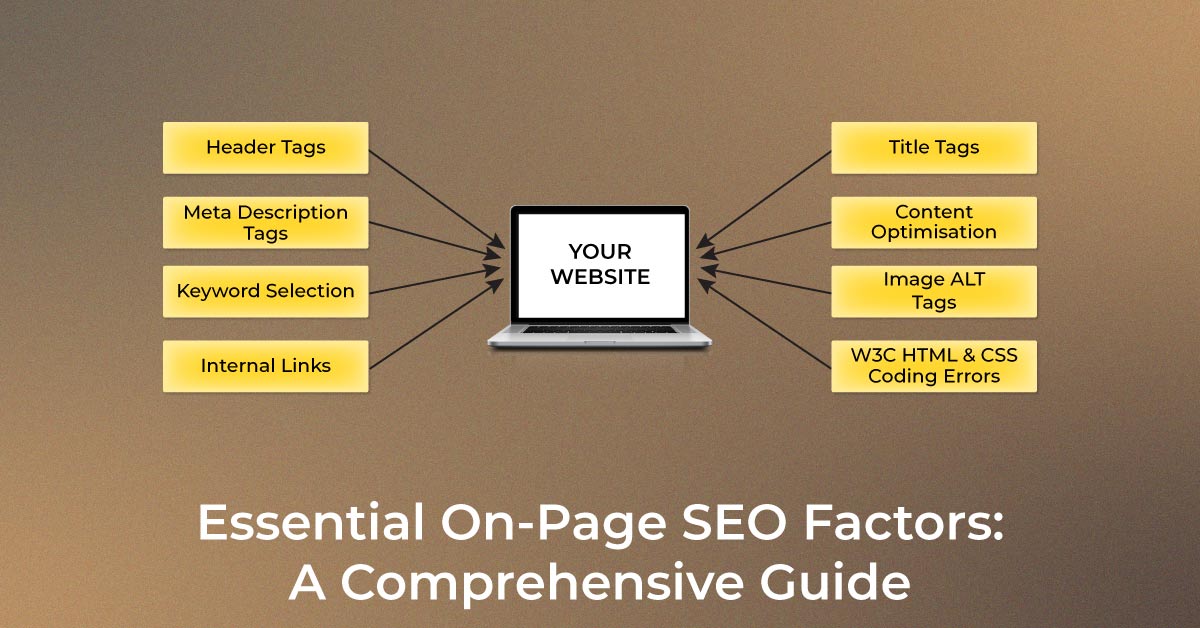

Placed in the <head> section of the HTML code of the site.

- Index, noindex – This function tells the search engine crawler whether the pages to be indexed or not. Default, the bot considers it as an ‘index’ function. Whereas, when you choose ‘noindex’, you are telling crawlers to isolate the pages from the SERP.

- Follow/nofollow – Helps the search engine crawler to decide which page should be monitored and pass the link equity.

Here’s the sample code

<head><meta name=”robots” content=”noindex, nofollow” /></head>

After knowing all the essential information, optimise your website with the advanced SEO offered by the best SEO agency in India. Join us in the comments below.

How do crawling and indexing differ from one another?

| Crawling | Indexing |

| Crawling in digital marketing means ‘following your links.’ | Indexing is simply the search engine adding web pages into Google Search. |

| It is the process through which search engines like Google index web pages. Google indexes pages only after it crawls through them. | It is the process through which Crawlers save or index these web pages and build a website library and analyze information and mark it as relevant for indexing. |

| Google’s Spiders or Crawlers perform the task of visiting your website to track the links. | Once Google Crawlers are done with their job, the results are added to Google’s index. Indexing follows crawling. |

| It follows the links or crawls the web pages. | It involves analyzing the page content and storing it in the index. |

| Search engine bots are responsible for discovering publicly available web pages. | Once crawling is complete, search engine bots save a copy of all the information on index servers. Search engines then procure the relevant results from this information whenever a user searches for a query. |

| Crawling is more resource-intensive than indexing | Indexing is more resource-efficient as it uses the information collected during the crawling process. |

How to look for crawling and indexing issues

-

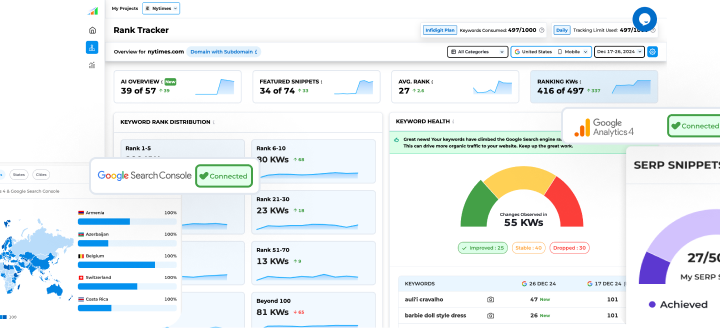

Use Google Search Console

The Page Indexing Report on the Google Search Console contains the crawling and indexing status of all the URLs concerning your website/s known to Google. The summary page provides a graphical representation and count of your indexed and non-indexed pages. The report also contains the issues that prevented your URLs from being indexed and the URLs affected by a particular issue. In addition, it provides a historical overview of your site in relation to these identified issues.

The Improve page experience table shows issues that did not affect indexing but can be fixed to improve Google’s understanding of your pages and site structure.

-

Employ a web crawler

When any web crawler visits a website, they check for the presence of robots.txt files and the instructions the website has for them. After the crawler reads the command, it starts crawling your website as per the instructions.

In the absence of instructions, the Google bot might visit every URL it can find, including the ones you don’t want to index. Web crawlers collect information like the copy and meta tags about each page and store it so the Google algorithm can later fetch them and use it to build and rank search results.

-

Server Log Analysis

If you know what is crawling in digital marketing, you must know about the role server log file analysis plays in SEO. When SEO professionals perform Server log analysis, they can better understand how crawlers are crawling and indexing their websites.

Log server files contain information not available elsewhere and are the only mediums for anyone to see how Google behaves on its site, the kind of content being crawled and how often, and other information related to search engine behavior on your website. This information can be useful for optimization and data-driven decisions.

Popular Searches

How useful was this post?

0 / 5. 0

14 thoughts on “Crawling and Indexing: How Search Engines Discover Your Site”

Even after you stop your SEO work, your website can still rank high on your chosen keywords, though you’re better off continuing with the services of an SEO consultant or in-house team, or you’ll risk losing your search ranking.

Thanks. SEO is a continuous process that has to be done on a daily basis. Search Engines make changes to their algorithms frequently, which affects your search rankings.

Crawling is the process by which search engines discover updated content on the web, such as new sites or pages, changes to existing sites, and dead links.

Thanks. Read our latest posts for more insights.

Nice one. Thanks for sharing.

Thanks Prerna. Do subscribe us for more latest updates.

Very Informative post. Thanks for sharing your knowledge with us.

Thanks. Check out our latest posts for more updates.

If you want your website to rank higher then you will need to have less competitive keywords on site. And i love this your content it is amazing and helpful.

Thank you for sharing your feedback Akpotohor. Check out our latest posts for more updates.

thank you for sharing very great information. it is more valuable information for me and very clearly explained by you.

Thank you for sharing your feedback. Do subscribe us for more latest updates.

Hi

I’m a big fan of your Blog.

Love your Awesome Blog! I would love to share it with my friends.

We are glad that you liked our post. Check out our latest posts for more updates.