What is the crawl budget?

Crawl budget is the number of URLs on a website that search engine crawlers crawl, and can therefore consider for indexing, over a given time period.

Google allocates a crawl budget to each website. Googlebot uses this budget to decide how many pages to crawl and how often to crawl them.

Why is the Crawl Budget limited?

Crawl budget is limited so that crawlers do not send a website too many requests. Each request uses server resources, and too many of them could seriously affect site performance and user experience.

How to Determine Crawl Budget

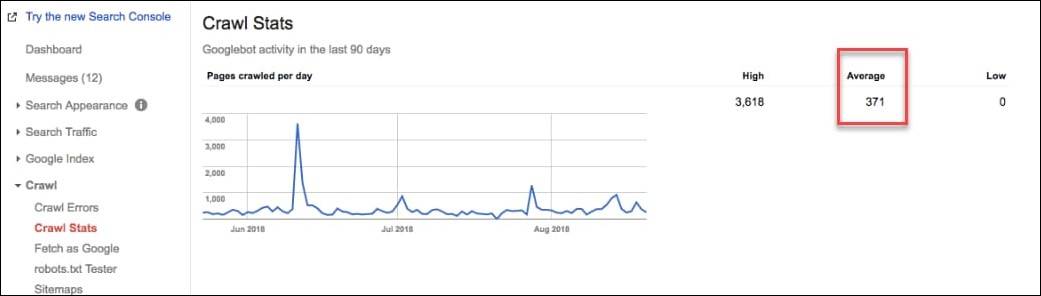

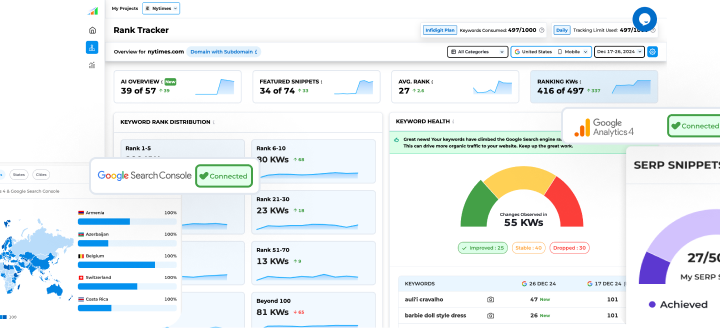

To determine the crawl budget for a website, check the Crawl Stats report in the Google Search Console account.

The report illustrated above shows the average number of pages crawled per day. Here, the estimated monthly crawl budget is 371 × 30 = 11,130 pages.

Although this number can fluctuate, it provides an estimated number of pages one can expect to get crawled in a given period.
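The estimate above is simple arithmetic, and a short sketch makes it easy to repeat with your own figures. The function name and the 30-day month are assumptions made for illustration; only the daily average of 371 comes from the report.

```python
# Average pages crawled per day, as read from the Crawl Stats report above.
AVG_PAGES_CRAWLED_PER_DAY = 371
# Assumption: a 30-day month, matching the calculation in the text.
DAYS_IN_PERIOD = 30


def estimate_crawl_budget(avg_per_day: int, days: int = DAYS_IN_PERIOD) -> int:
    """Return the estimated number of pages crawled over `days` days."""
    return avg_per_day * days


monthly_budget = estimate_crawl_budget(AVG_PAGES_CRAWLED_PER_DAY)
print(f"Estimated monthly crawl budget: {monthly_budget:,} pages")
```

Because the daily average fluctuates, the result is best treated as a rough guide rather than a fixed quota.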

If you need more detail about search engine bot activity, you can analyse the server log files to see how the website is actually being crawled.
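As a rough illustration of log file analysis, the sketch below counts which paths and status codes Googlebot requested. The sample lines are invented and assume the widely used "combined" access-log format; a real log may use a different layout. Matching on the user-agent string alone is also only an approximation, since that string can be spoofed.

```python
import re
from collections import Counter

# Hypothetical sample lines in the "combined" access-log format.
SAMPLE_LOG = """\
66.249.66.1 - - [10/Mar/2020:06:25:11 +0000] "GET /products/shoes HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
203.0.113.7 - - [10/Mar/2020:06:25:12 +0000] "GET /products/shoes HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"
66.249.66.1 - - [10/Mar/2020:06:26:40 +0000] "GET /old-page HTTP/1.1" 404 312 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
66.249.66.1 - - [10/Mar/2020:06:27:02 +0000] "GET /products/shoes HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
"""

# Captures the request path, the status code and the user agent of one line.
LINE_RE = re.compile(
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(log_text):
    """Count requested paths and status codes for lines whose agent names Googlebot."""
    paths, statuses = Counter(), Counter()
    for line in log_text.splitlines():
        match = LINE_RE.search(line)
        if match and "Googlebot" in match.group("agent"):
            paths[match.group("path")] += 1
            statuses[match.group("status")] += 1
    return paths, statuses


paths, statuses = googlebot_hits(SAMPLE_LOG)
for path, hits in paths.most_common():
    print(path, hits)
print(dict(statuses))
```

Paths that are crawled often but matter little, or status codes other than 200, are the first places to look for wasted crawl budget.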

How to Optimise Crawl Budget

For a web page to appear in Google results, it must first be crawled and then indexed. The following seven actions can help in optimising the crawl budget for SEO.

-

Allow the crawling of important pages in the robots.txt file

To make sure relevant pages and content are crawlable, do not block them in the robots.txt file. To make good use of the crawl budget, block Google from crawling unnecessary pages such as user login pages, certain files, or administrative sections of the website by disallowing those files and folders in robots.txt. Keep in mind that robots.txt controls crawling rather than indexing. For large websites, this is one of the most effective ways to conserve crawl budget.
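The rules described above can be checked before they go live. The following sketch uses Python's standard `urllib.robotparser` module to test a set of rules against a few URLs. The robots.txt content, the folder names, and the `example.com` URLs are hypothetical and only illustrate the idea.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: login, admin and cart sections are blocked,
# everything else stays crawlable.
ROBOTS_TXT = """\
User-agent: *
Disallow: /login/
Disallow: /admin/
Disallow: /cart/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_crawlable(url, user_agent="Googlebot"):
    """Return True if the parsed rules allow `user_agent` to fetch `url`."""
    return parser.can_fetch(user_agent, url)


for url in (
    "https://www.example.com/products/shoes",
    "https://www.example.com/admin/settings",
    "https://www.example.com/login/",
):
    print(url, "->", "crawlable" if is_crawlable(url) else "blocked")
```

Running a check like this against the list of important URLs helps catch a rule that accidentally blocks a page that should be crawled.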

-

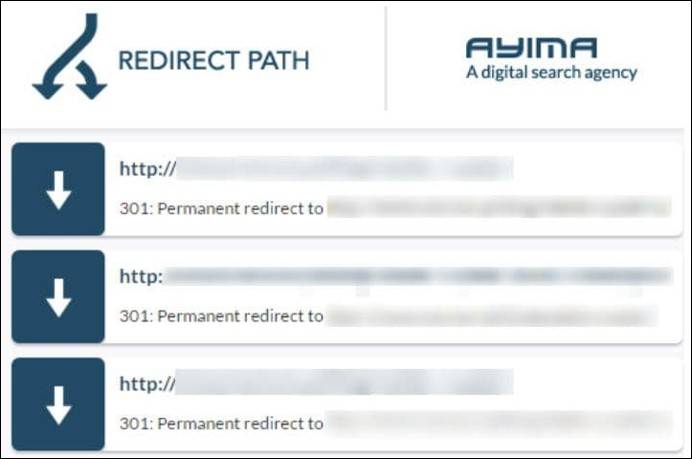

Avoid long redirect chains

If a website has many chained 301 and 302 redirects, the search engine crawler may stop following them at some point and never reach the important pages. Every extra redirect wastes part of the crawl budget.

Ideally, redirects would be avoided altogether. However, that is not practical for huge websites. The best approach is to redirect only when it is necessary and to keep each redirect to a single hop that points directly to the final URL.
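One way to find chains longer than a single hop is to walk a redirect map exported from the server configuration or a crawl. The sketch below assumes such a map is already available as a dictionary; the paths and function names are hypothetical.

```python
# Hypothetical redirect map: source path -> target path.
REDIRECTS = {
    "/old-shoes": "/shoes-2019",
    "/shoes-2019": "/shoes",
    "/promo": "/shoes",
}


def redirect_chain(url, redirects, max_hops=10):
    """Follow redirects from `url` and return every URL visited, in order."""
    chain = [url]
    seen = {url}
    while chain[-1] in redirects and len(chain) <= max_hops:
        target = redirects[chain[-1]]
        chain.append(target)
        if target in seen:  # redirect loop, stop here
            break
        seen.add(target)
    return chain


def long_chains(redirects, max_redirects=1):
    """Return the chains that need more than `max_redirects` hops."""
    found = {}
    for source in redirects:
        chain = redirect_chain(source, redirects)
        if len(chain) - 1 > max_redirects:
            found[source] = chain
    return found


for source, chain in long_chains(REDIRECTS).items():
    print(" -> ".join(chain), f"(point {source} straight to {chain[-1]})")
```

Each chain reported this way can be shortened by redirecting the first URL directly to the last one.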

-

Managing URL Parameters

Endless combinations of URL parameters create duplicate URL variations of the same content. Crawling these redundant URLs drains crawl budget, adds server load, and reduces the chance that SEO-relevant pages get crawled and indexed.

To save crawl budget, Google Search Console offered a way to tell Google how to handle parameter variations, under Legacy tools and reports > URL Parameters. Note that Google retired this tool in 2022, so parameter handling now has to be managed on the site itself, for example by linking consistently to one version of each URL.
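To see how many parameter variations point at the same content, crawled URLs can be grouped by a normalised form. The sketch below uses the standard `urllib.parse` module. The list of ignored parameters is an assumption and has to be adapted to each site, since only the site owner knows which parameters leave the content unchanged.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumption: these parameters only affect tracking or presentation.
IGNORED_PARAMS = {"utm_source", "utm_medium", "sessionid", "sort"}


def canonical_form(url):
    """Drop ignored parameters and sort the rest so equal pages compare equal."""
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query)
        if key not in IGNORED_PARAMS
    )
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def group_duplicates(urls):
    """Return {canonical URL: [variations]} for groups with more than one URL."""
    groups = defaultdict(list)
    for url in urls:
        groups[canonical_form(url)].append(url)
    return {key: value for key, value in groups.items() if len(value) > 1}


crawled = [
    "https://www.example.com/shoes?color=red&sort=price",
    "https://www.example.com/shoes?sort=name&color=red",
    "https://www.example.com/shoes?color=red&utm_source=mail",
    "https://www.example.com/bags",
]
for canonical, variations in group_duplicates(crawled).items():
    print(canonical, "has", len(variations), "variations")
```

Large groups are good candidates for cleanup, because every variation in them costs a crawl request without adding new content.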

-

Improve Site Speed

Improving site speed increases the likelihood that Googlebot crawls more pages. Google has stated that a faster site improves user experience and also increases the crawl rate.

In simple words, a slow website eats up valuable crawl budget. If an effort is made to improve site speed and implement advanced SEO techniques, pages load quickly and Googlebot has enough time to visit and crawl more pages.

For Ecommerce websites, most top Ecommerce SEO services work on page speed as part of a concrete SEO strategy to drive more traffic, improve user experience, and reduce the bounce rate.

-

Updating Sitemap

The XML sitemap should contain only the most important pages so that Googlebot visits and crawls them more frequently. It is therefore necessary to keep the sitemap updated and free of redirects and errors.
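A sitemap that stays free of redirects and errors can be produced by filtering on the status code before writing each entry. The sketch below assumes a list of (URL, status code) pairs is already available, for example from a site crawl. The URLs are hypothetical, and the namespace is the one defined by the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, status_code) pairs, keeping only 200 responses."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, status in pages:
        if status != 200:  # skip redirects (3xx) and errors (4xx, 5xx)
            continue
        url_element = ET.SubElement(urlset, "url")
        ET.SubElement(url_element, "loc").text = url
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical crawl results.
pages = [
    ("https://www.example.com/", 200),
    ("https://www.example.com/shoes", 200),
    ("https://www.example.com/old-shoes", 301),
    ("https://www.example.com/missing", 404),
]
sitemap_xml = build_sitemap(pages)
print(sitemap_xml)
```

Regenerating the file whenever pages are added or removed keeps the sitemap in step with the site.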

-

Fixing HTTP Errors

Broken links and server errors eat up crawl budget. Check your website for pages that return 404 and 503 errors, and fix them as soon as possible.

To do this, check the Coverage report in Search Console to see whether Google has detected any 404 errors. Download the entire list of URLs, then analyse whether each 404 page can be redirected to a similar or equivalent page. If it can, redirect the broken URL to that page.

For this task, Screaming Frog and SE Ranking are recommended. Both are effective website audit tools that cover technical SEO checklist points.
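Once the list of URLs has been downloaded, the triage described above can be sketched as a small script. The crawl results and the replacement map below are hypothetical. Deciding which page counts as "similar or equivalent" still needs human judgement, so the map is assumed to be prepared by hand.

```python
# Hypothetical export: (path, HTTP status code) pairs from an audit tool.
CRAWL_RESULTS = [
    ("/shoes", 200),
    ("/old-shoes", 404),
    ("/checkout", 503),
    ("/retired-page", 404),
]

# Hand-made map of broken pages to a similar or equivalent live page.
REPLACEMENTS = {"/old-shoes": "/shoes"}


def triage(results, replacements):
    """Sort problem URLs into redirects to add, 404s to review, and server errors."""
    plan = {"redirect": {}, "review": [], "server_errors": []}
    for url, status in results:
        if status == 404:
            if url in replacements:
                plan["redirect"][url] = replacements[url]
            else:
                plan["review"].append(url)
        elif 500 <= status < 600:
            plan["server_errors"].append(url)
    return plan


plan = triage(CRAWL_RESULTS, REPLACEMENTS)
print(plan)
```

URLs that end up under "review" have no equivalent page yet, so they need a decision rather than an automatic redirect.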

-

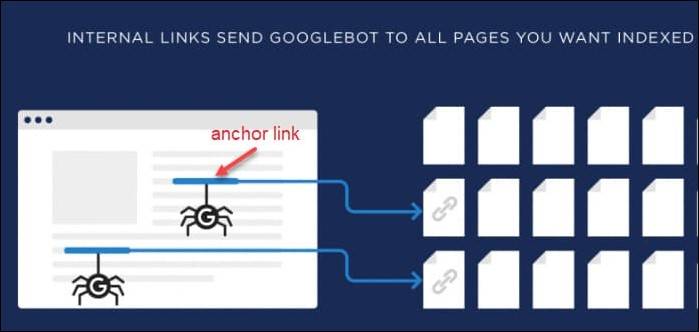

Internal Linking

Googlebot tends to prioritise crawling URLs that have a larger number of internal links pointing to them. Internal links allow Googlebot to find the different types of pages on the site that need to be indexed to gain visibility in Google SERPs. Internal linking was one of the important SEO trends of 2020. It also helps Google understand the architecture of a website and navigate through the site smoothly.
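To see which pages receive few internal links, the link graph of a site can be counted page by page. The sketch below assumes the graph is already available as a dictionary of page to outgoing internal links, for example from a crawl export. The paths are hypothetical.

```python
from collections import Counter

# Hypothetical internal link graph: page -> pages it links to.
LINKS = {
    "/": ["/shoes", "/bags"],
    "/shoes": ["/shoes/running", "/bags"],
    "/bags": ["/shoes"],
    "/shoes/running": [],
    "/clearance": [],
}


def inbound_link_counts(links):
    """Count how many distinct pages link to each page, ignoring self-links."""
    counts = Counter({page: 0 for page in links})
    for source, targets in links.items():
        for target in set(targets):
            if target != source:
                counts[target] += 1
    return counts


def pages_without_inbound_links(links, home="/"):
    """Return pages that no other page links to, excluding the home page."""
    counts = inbound_link_counts(links)
    return sorted(page for page, total in counts.items() if total == 0 and page != home)


counts = inbound_link_counts(LINKS)
for page, total in sorted(counts.items(), key=lambda item: item[1]):
    print(page, total)
print("No inbound links:", pages_without_inbound_links(LINKS))
```

Pages at the top of this list are the ones a crawler is least likely to reach through the site's own navigation.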

Wrapping Up

Optimising a website's crawling and indexing goes hand in hand with optimising the website itself. Companies that offer SEO services in India take the crawl budget into account when carrying out SEO audits.

If the site is well maintained or relatively small, there is no need to worry about the crawl budget. But in cases such as larger websites, newly added pages, or a lot of redirects and errors, attention should be paid to using the crawl budget as effectively as possible.

Monitoring the crawl rate in the Crawl Stats report at regular intervals helps reveal any sudden spike or fall in crawl rate.

14 thoughts on “7 Tips On How To Optimise Crawl Budget for SEO”

Great post. Thanks for sharing…

Thanks. Read our latest posts for more updates.

Nice Blog Post.

Thanks. Check out our latest posts for more updates.

Great applause for Your SEO team who always inspires us with innovative posts.

Thanks. Read our latest posts for more updates.

Google has a really hard time finding orphan pages. So if you want to get the most out of your crawl budget, make sure that there’s at least one internal or external link pointing to every page on your site.

Thank you for sharing your feedback. Check out our latest posts for more updates.

WoW, such an amazing post.

Thanks. Please read our latest posts for more updates.

Thanks for sharing this useful post. It’s really helpful for me.

Thanks, Ronnie. Glad to know you liked our post.

Great post, thanks for sharing…

Appreciated. Please check out our latest blogs for more updates.