A recent report highlighted that over 42% of online traffic comes from non-human sources. This traffic comprises a range of programs, from legitimate crawlers and bots to malicious automated software. So, what exactly are traffic bots, and what does bot traffic entail? Let’s find out.

What is Bot Traffic?

Bots are specifically designed to perform automated tasks on the Internet without human intervention. These tasks can include sending reminders, scheduling interviews, etc.

Bots are useful for carrying out repetitive and mundane tasks and can even mimic the behavior of a real user. Given their speed and efficiency, they are used to run automated tasks at a large scale.

Any non-human traffic to a website or an app is bot traffic. Encountering website traffic bots is quite normal on the Internet: by some estimates, nearly 30% of internet traffic is bot traffic.

But is bot traffic good or bad?

Bad bots can harm your website by trying to force their way in and steal sensitive information. However, not all bot traffic is bad. There are good, legitimate bots too, built by their creators for a clear purpose.

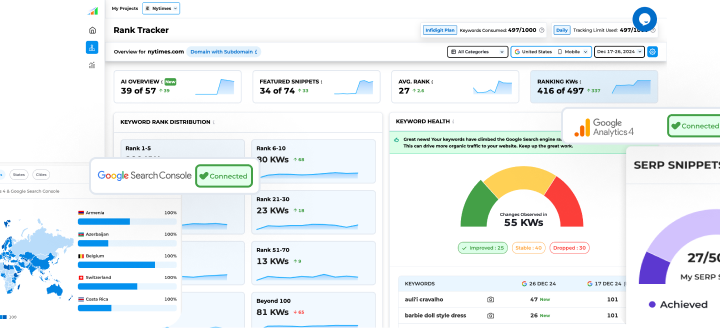

Types of Bot Traffic

Good bots

The best-known good bots are search engine crawlers and bots that power digital services such as personal assistants. This bot traffic doesn’t harm the website; the bots announce themselves and what they intend to do on your website.

Good bots include site-monitoring bots, feed/aggregator bots, and SEO crawlers.

- SEO tools like Ahrefs send out SEO crawlers to gather information.

- Site-monitoring bots collect data on a website’s uptime and related metrics.

- Feed bots gather newsworthy content to be delivered via email.
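
Because good bots announce themselves, usually through the User-Agent request header, a first-pass check can look for known crawler names in that header. The sketch below is a minimal illustration, not a complete detector: the token list is a small illustrative sample, and the name `declared_bot` is hypothetical. Since bad bots can fake this header, a match only tells you what a client claims to be.

```python
from typing import Optional

# Illustrative, non-exhaustive sample of names that self-identifying
# crawlers include in their User-Agent header.
KNOWN_BOT_TOKENS = ("googlebot", "bingbot", "ahrefsbot")


def declared_bot(user_agent: str) -> Optional[str]:
    """Return the known bot token found in the User-Agent, or None.

    This only reports what the client claims to be; it does not verify it.
    """
    ua = user_agent.lower()
    for token in KNOWN_BOT_TOKENS:
        if token in ua:
            return token
    return None


crawler_ua = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
browser_ua = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"
crawler_match = declared_bot(crawler_ua)
browser_match = declared_bot(browser_ua)
```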

Commercial bots

These are website traffic bots that commercial companies send to gather information by crawling the web. Examples include bots that research companies send to monitor market news and ad network bots sent to monitor display ads.

Bad bots

Bad bots ignore the rules in robots.txt files and try to hide their source and identity to pass off as human traffic. What sets them apart from good bots is their intent: they are built for malicious purposes, such as disrupting or damaging a website. If left unchecked, these bots can cause permanent damage to websites. Some of the most common types of bad bots are spam bots, credential stuffing bots, web data scraping bots, DoS bots, ad fraud bots, and gift card fraud bots.

Why Does Bot Traffic Matter to You?

Security and Performance of your Website

Once you understand what bot traffic is, you can also see how these bots affect your website’s security and performance. Bad or malicious bot traffic can crash your website or strain and overload your web server. A DDoS attack is an apt example: incessant requests from these website traffic bots take up the server’s bandwidth, slowing down your website or making it inaccessible to users.

Such attacks may cause a loss of traffic and, in turn, of sales on your e-commerce website. Bot traffic is often disguised as human traffic, so it is hard to tell apart in website statistics. You may see random spikes in the traffic on your website, yet none of that traffic leads to conversions.

These bots can also pose risks to the security of your website. If there are vulnerabilities or weak entry points, these bots may try to exploit them, install viruses, and spread those among your users. E-commerce websites and online stores hold sensitive user information, such as credit card details, which these bots can steal if they brute-force their way into the website.

For the Sake of the Environment

A website traffic bot can also harm the environment. How?

Whenever a bot sends an HTTP request to your server asking for information, your server needs to respond and return the necessary information. Each response uses a small amount of energy. Multiply this amount by the number of requests made by the countless bots crawling the Internet, and it adds up to a massive use of energy!

Even a good website traffic bot uses energy, just as a bad bot does. This makes search engines energy-intensive, despite being an essential part of the Internet. The number of times these crawlers and bots visit your website may surprise you. And at times, these bots are unable to pick up the correct information.

Google bots may visit a website several thousand times a day to note the changes, and they may not be the only bots visiting your site. Bots from digital services, other search engines, and other sources may visit your website daily, putting unnecessary strain on already limited non-renewable resources.
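
To see how often crawlers actually visit, you can count requests per User-Agent in your server’s access log. The following is a rough sketch that assumes each log line ends with the User-Agent in double quotes, as in the widely used "combined" log format. The sample lines, bot name, and IP addresses are invented for illustration, and `requests_per_agent` is a hypothetical helper name.

```python
import re
from collections import Counter

# Assumption: the User-Agent is the last double-quoted field on each line.
UA_PATTERN = re.compile(r'"([^"]*)"\s*$')


def requests_per_agent(log_lines):
    """Count how many requests each User-Agent string made."""
    counts = Counter()
    for line in log_lines:
        match = UA_PATTERN.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts


# Invented sample lines for illustration only.
sample_log = [
    '203.0.113.5 - - [01/Jan/2024:00:00:01 +0000] "GET / HTTP/1.1" 200 512 "-" "ExampleBot/1.0"',
    '203.0.113.5 - - [01/Jan/2024:00:00:02 +0000] "GET /about HTTP/1.1" 200 734 "-" "ExampleBot/1.0"',
    '192.0.2.10 - - [01/Jan/2024:00:00:03 +0000] "GET / HTTP/1.1" 200 512 "-" "Mozilla/5.0"',
]

agent_counts = requests_per_agent(sample_log)
busiest_agents = agent_counts.most_common(5)
```

Sorting the counts with `most_common` puts the most frequent visitors first, which is usually where heavy crawlers show up.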

How is Bot Traffic Identified?

Managing bot traffic is no easy feat, and identifying it is the most important step toward correctly assessing your site analytics. Here are some signs to look out for that can help you identify bot traffic:

- Sudden increase in bounce rates and traffic – Both of these things happening at the same time is a tell-tale indication of bad bot traffic on your website. This can mean that either many bad bots are visiting your site, or one bad bot is repeatedly visiting your site.

- Sudden decrease in page loading speed – If you have not updated your website or made any big changes and your page loading speed sees a dramatic fall, it is a sign that your website is being flooded with bad bots. However, you should also take a look at some other KPIs of your website, as there might also be some other technical on-page issues that might cause this.

- Dramatic decrease in bounce rates – If your bounce rate suddenly dips very low, it is a strong indicator that bad bots like web scraping bots are flooding your website and stealing content. This usually happens when these bots are scanning a vast number of webpages on your site.

Keep a close eye on the KPIs in Google Analytics, and you can readily spot these abnormalities and identify bot traffic on your website.
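
The signals above can be turned into a simple check on exported daily metrics. The sketch below flags a day when sessions jump well above the average of the preceding days and the bounce rate shifts sharply in either direction at the same time. The thresholds, field names, and sample figures are all assumptions made for illustration; real data would need tuning and a longer baseline.

```python
from statistics import mean


def flag_suspicious_days(days, traffic_factor=2.0, bounce_shift=0.20):
    """Return dates whose traffic spikes while the bounce rate shifts sharply.

    days: list of dicts with 'date', 'sessions', and 'bounce_rate' (0 to 1),
    ordered oldest first. Each day is compared with the mean of all earlier
    days, so an already flagged day still feeds later baselines.
    """
    flagged = []
    for i in range(1, len(days)):
        history = days[:i]
        base_sessions = mean(d["sessions"] for d in history)
        base_bounce = mean(d["bounce_rate"] for d in history)
        today = days[i]
        traffic_spike = today["sessions"] > traffic_factor * base_sessions
        bounce_changed = abs(today["bounce_rate"] - base_bounce) > bounce_shift
        if traffic_spike and bounce_changed:
            flagged.append(today["date"])
    return flagged


# Invented figures for illustration only.
daily_metrics = [
    {"date": "2024-01-01", "sessions": 1000, "bounce_rate": 0.45},
    {"date": "2024-01-02", "sessions": 1100, "bounce_rate": 0.47},
    {"date": "2024-01-03", "sessions": 950, "bounce_rate": 0.44},
    {"date": "2024-01-04", "sessions": 4000, "bounce_rate": 0.92},
]

suspicious = flag_suspicious_days(daily_metrics)
```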

How can Analytics be Harmed by Bot Traffic?

Unauthorized traffic generated from bots can severely impact the metrics in analytics such as bounce rates, conversions, page views, geolocation of users, session durations, and more. This impact can make it difficult for site owners to correctly measure their website’s performance. It can also impact various analytics activities like A/B testing, on-page SEO improvements, and conversion rate optimization. The statistical noise and interference in data can cripple these activities, and make it hard for site owners to efficiently improve their website’s functionality.

How to Filter Bot Traffic from Google Analytics?

Google Analytics provides some options to help filter bot traffic. For example, selecting the “exclude all hits from known bots and spiders” feature will exclude the views from bots in the analytics reports. And if you can identify the source of bot traffic, you can provide a list of IPs for Google Analytics to ignore when counting site visits. While this won’t stop bots from coming to your website, it will help you filter out bot traffic and see the real organic traffic on your website.
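
The same IP-exclusion idea can be applied to raw hit data you export yourself. The sketch below is not a Google Analytics API call; it is a stand-alone illustration that drops hits whose address falls inside a list of excluded IPs or ranges. The record layout, the function name `filter_hits`, and the addresses (taken from reserved documentation ranges) are assumptions.

```python
import ipaddress


def filter_hits(hits, excluded_networks):
    """Return only the hits whose IP is outside every excluded network.

    hits: list of dicts with an 'ip' key.
    excluded_networks: single addresses or CIDR ranges, as strings.
    """
    networks = [ipaddress.ip_network(n) for n in excluded_networks]
    kept = []
    for hit in hits:
        address = ipaddress.ip_address(hit["ip"])
        if not any(address in network for network in networks):
            kept.append(hit)
    return kept


# Invented hits for illustration only.
raw_hits = [
    {"ip": "203.0.113.9", "page": "/"},
    {"ip": "192.0.2.10", "page": "/pricing"},
    {"ip": "198.51.100.7", "page": "/"},
]
excluded = ["203.0.113.0/24", "198.51.100.7"]

human_hits = filter_hits(raw_hits, excluded)
```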

How Can Bot Traffic Impact Performance?

One of the most common ways for attackers to launch DDoS attacks on websites is to send a huge amount of bot traffic. This traffic can overload the origin servers, which can significantly slow the website down or even make it inaccessible to legitimate users.

How Can Websites Manage Bot Traffic?

Here are a few ways in which websites can manage bot traffic:

- Include a robots.txt file for providing instructions to bots crawling the page

- Use a rate-limiting solution

- Use filtering tools to block a list of identified bad bot sources or IPs from visiting the website

- Deploy a bot management solution for smart management of bot traffic
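
To make the rate-limiting option concrete, here is a minimal sliding-window limiter. It is a sketch of one common technique, not the behavior of any particular product: each client may make at most `max_requests` within any `window_seconds` period. The class name and limits are hypothetical, and a production setup would typically enforce this at the web server, CDN, or firewall rather than in application code.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most max_requests per client within window_seconds."""

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits = defaultdict(deque)  # client id -> timestamps of allowed requests

    def allow(self, client_id, now=None):
        """Return True if the request is allowed, False if it should be rejected."""
        now = time.monotonic() if now is None else now
        hits = self._hits[client_id]
        # Forget requests that have aged out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False
        hits.append(now)
        return True


# Explicit timestamps make the behavior easy to follow.
limiter = SlidingWindowLimiter(max_requests=2, window_seconds=10)
decisions = [
    limiter.allow("203.0.113.5", now=0),   # first request in the window
    limiter.allow("203.0.113.5", now=1),   # second request in the window
    limiter.allow("203.0.113.5", now=2),   # third request, over the limit
    limiter.allow("203.0.113.5", now=10),  # the request from t=0 has expired
]
```

Keying the limiter by IP address is the simplest choice, but bots that rotate addresses will slip past it, which is one reason dedicated bot management tools exist.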

Manage Bot Traffic on Your Website Efficiently

While bot traffic has its perks, it is important for websites to tell the good from the bad. There are many approaches you can use to mitigate and control bot traffic on your website. But investing in a smart and certified bot management solution is the most effective way to manage bot traffic and mitigate risks on your website.

How to Combat “Bad” Bots

Bad bots can hamper your website’s health, so how should you detect and block such website bot traffic? The most straightforward way is to block the individual IP addresses, or entire lists of IP addresses, from which the bots originate or from which you see a lot of irregular incoming traffic. This saves server bandwidth and reduces strain. However, the approach can get cumbersome and time-consuming.

You can also use software like a bot management solution. Providers such as Cloudflare have an extensive database of these good and bad bots. The vendor companies also employ AI and machine learning to detect and block such bots.

Another way to tackle the bot traffic problem, if you are running a WordPress website, is to install a security plugin such as Sucuri Security, Wordfence, etc. These companies have security researchers who monitor issues and patch them as they arise. The plugins let you block unusual traffic or learn how to deal with it.

What Can You Do for the “Good” Bots?

Good bots may not harm your website and are also essential, but they consume a lot of energy. And at times, they might not specifically help your website in any way. Even good bots can be a disadvantage to your website, increasing the server load unnecessarily and promoting wasteful consumption.

So, what can you do about these good traffic bots?

If They are not Useful, Block Them

It is necessary to assess whether these good website traffic bots benefit your site in any way, and whether their crawling is worth more than the cost incurred by your servers and their servers, plus the environmental damage caused.

Search engines other than Google also send their website traffic bots to crawl websites. Suppose a traffic bot from one of these search engines has visited your site around a thousand times in a single day.

What if the crawling brought you only ten visitors? Is the ratio of the number of visitors to the number of times your website was crawled of any use? If not, consider blocking the IP address from where the bot traffic is emerging.
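
The comparison above is simple arithmetic, sketched here with the figures from the example: ten visitors from a thousand crawls. The cutoff `min_ratio` is a hypothetical value you would choose yourself; nothing in this article prescribes a specific threshold.

```python
def visitors_per_crawl(referred_visitors, crawl_requests):
    """Return visitors gained per crawl request, or None if there were no crawls."""
    if crawl_requests == 0:
        return None
    return referred_visitors / crawl_requests


def worth_allowing(referred_visitors, crawl_requests, min_ratio):
    """Return True if the bot brings at least min_ratio visitors per crawl.

    min_ratio is an assumed, site-specific cutoff.
    """
    ratio = visitors_per_crawl(referred_visitors, crawl_requests)
    return ratio is None or ratio >= min_ratio


ratio = visitors_per_crawl(10, 1000)  # one visitor per hundred crawls
keep_bot = worth_allowing(10, 1000, min_ratio=0.05)
```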

Control the Bot’s Crawl Rate

Website traffic bots may visit your website several hundred times a day to pick up the changes you made. Not even a large website would change a hundred times in a single day, so much of this crawling is wasted effort.

Try limiting the crawl rate if the bots support the crawl-delay directive in robots.txt. Experiment with the crawl rate and note the impact on your website. Keep increasing the delay as long as the change has no significant negative consequences for your website.

Alternatively, assign a specific delay to each of the crawlers visiting your website. It is worth noting that crawl-delay won’t curb Google’s traffic bots, because they don’t support it.

Improve Their Ability to Crawl

As discussed, not all crawlers are good and useful, and not all kinds of crawling are efficient. There might be several parts of your website, such as the internal search results, that website traffic bots don’t need to visit. Try blocking their access using robots.txt to save energy and also optimize the crawl budget for your website.

Instead of blocking access, you can also help the crawlers move efficiently through your site by removing unnecessary plugins and links created by your CMS. For example, WordPress automatically creates an RSS feed for the comments each time you publish something.
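
Both robots.txt techniques, a crawl delay and blocking a section such as internal search, can be checked before you deploy them. The sketch below uses Python’s standard `urllib.robotparser` to read a sample file the way a compliant crawler might. The bot name `ExampleBot`, the `/search/` path, and the ten-second delay are made up for illustration, and `Crawl-delay` is a non-standard directive that only some crawlers honor.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: slow one named crawler down and keep every
# crawler out of the internal search results.
ROBOTS_TXT = """\
User-agent: ExampleBot
Crawl-delay: 10
Disallow: /search/

User-agent: *
Disallow: /search/
"""


def load_rules(robots_text):
    """Parse robots.txt content from a string instead of fetching it."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


rules = load_rules(ROBOTS_TXT)

delay_for_example_bot = rules.crawl_delay("ExampleBot")
delay_for_other_bots = rules.crawl_delay("OtherBot")
search_allowed = rules.can_fetch("ExampleBot", "/search/results")
blog_allowed = rules.can_fetch("ExampleBot", "/blog/post")
```

Remember that robots.txt is advisory: well-behaved crawlers follow it, while bad bots simply ignore it.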

If you don’t have enough comments on that content piece, the link hardly gets any visitors and has no utility. By managing the access, you can prevent crawlers from visiting such links repeatedly and wasting precious energy resources.

Conclusion

SEO tools and applications like Yoast have developed many useful and sustainable settings, such as crawl optimization, to prevent website traffic bots from adding to the server load. You can use such settings to remove or turn off links or add-ons that WordPress automatically adds to the site.

Also, consider reviewing the crawl settings to clean up your site regularly and avoid unnecessary overhead costs. Regularly clean up your website’s internal site search to prevent SEO attacks. By optimizing your crawl settings, you not only reduce your server load and improve site health, but you also become an environmental warrior, protecting crucial resources and reducing carbon emissions from such wasteful consumption.
