While most SEO experts know that robots.txt and the Meta Robots tag control how search engine bots access a website, many do not know how to use them efficiently. The two work differently, and it’s crucial to know which one to use and when. In this guide, we lay out the best practices for setting up a robots.txt file and Meta Robots tags.

Robots.txt

Robots.txt is a file that tells search engine robots which areas of a website they are allowed and not allowed to crawl. It is part of the Robots Exclusion Protocol (REP), a group of standards that regulate how robots crawl and index content on the Web. It may sound complex and technical, but a robots.txt file is very easy to set up. Let’s get started!

A simple robots.txt file looks something like this:

User-agent: *

Allow: /

Disallow: /thank-you

Sitemap: https://www.example.com/sitemap.xml

Allow and Disallow are the most important commands used in a robots.txt file to guide robots. Let us understand what they mean.

Syntax

User-agent – Specifies the user agent name for which the directives are meant.

* indicates that the directives are meant for all crawlers

Other values for this field can be Googlebot, yandexbot, bingbot, and so on.

Allow – Tells the crawlers named in User-agent that they can crawl the specified Uniform Resource Locators (URLs).

Disallow – Blocks the crawlers named in User-agent from crawling the specified URL(s).

Sitemap – Specifies the Sitemap URL of your website.

In the example, User-agent: * means that the commands that follow apply to ALL types of bots. Allow: / denotes that crawlers can crawl the entire website except the paths disallowed in the file. Lastly, Disallow: /thank-you instructs crawlers not to crawl any URL whose path begins with /thank-you. The User-agent, Allow and Disallow commands perform the basic function of a robots.txt file, i.e. allowing and blocking the specified crawlers.
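
To make the Allow and Disallow behaviour concrete, here is a minimal Python sketch of how a crawler might evaluate the example file. It assumes the common "longest matching rule wins" convention and plain prefix matching on the URL path; the RULES list and the is_allowed function are illustrative names, not part of any crawler, and query strings and wildcards are ignored for simplicity.

```python
from urllib.parse import urlsplit

# Rules from the example file, as (directive, path prefix) pairs.
RULES = [("allow", "/"), ("disallow", "/thank-you")]


def is_allowed(url, rules=RULES):
    """Return True if the URL path may be crawled under the given rules."""
    path = urlsplit(url).path or "/"
    # Keep only the rules whose prefix matches the start of the path.
    matches = [
        (len(prefix), kind == "allow")
        for kind, prefix in rules
        if path.startswith(prefix)
    ]
    if not matches:
        return True  # no rule applies, so crawling is allowed
    # The longest prefix wins; on a tie, True (allow) sorts above False.
    return max(matches)[1]


print(is_allowed("https://www.example.com/"))                # True
print(is_allowed("https://www.example.com/thank-you"))       # False
print(is_allowed("https://www.example.com/thank-you-page"))  # False
print(is_allowed("https://www.example.com/blog/thank-you"))  # True
```

Note that the last URL stays crawlable: the rule is matched against the beginning of the path, not anywhere inside it.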

Best Robots.txt Practices

Here are some pro-SEO tips that you should follow when setting up your own robots.txt file.

- First and foremost, please research and understand which areas of the website you don’t want to be crawled. Do not simply copy or reuse someone else’s robots.txt file.

- Always place your robots.txt file in the root directory of your website so that search engine crawlers can easily find it.

- Name the file exactly “robots.txt”, in lowercase, since the file name is case-sensitive.

- Always specify your sitemap URL in robots.txt as it helps search engine bots to find your website pages more easily.

- Do not use robots.txt to hide private information or future event pages. Because it is a public file, anyone can read it by simply adding /robots.txt after your domain name and see which pages you want to hide, so it is not a suitable place for sensitive pages.

- Create a dedicated and customised robots.txt file for each and every sub-domain that belongs to your root domain.

- Before going live, be triply sure that you are not blocking anything that you don’t wish to.

- Always test and validate your robots.txt file with a testing tool, such as the robots.txt report in Google Search Console, to find any errors and check that your directives are actually working.

- Googlebot won’t follow any links on pages blocked through robots.txt. Hence, ensure that the important links present on blocked pages are linked to other pages of your website as well.

- While setting up your robots.txt file, keep in mind that blocked pages won’t pass any link equity to the pages they link to.

- Do not link to pages blocked in robots.txt from other pages of your website. If they are linked, Google can still discover those URLs through the internal links and may index them without crawling their content.

- Ensure your robots.txt file is properly formatted:

  - Define each directive on a new line.

  - Maintain the case-sensitivity of the URL(s) when allowing or disallowing.

  - Do not use any special characters other than the wildcards * (matches any sequence of characters) and $ (marks the end of a URL).

  - Use # to add comments for more clarity. Crawlers ignore everything after the # character on a line.

- Types of pages you should typically block from crawling using robots.txt:

  - Pagination pages

  - Query parameter variations of a page

  - Account or profile pages

  - Admin pages

  - Shopping cart

  - Thank you pages

- Use robots.txt to block pages that are not linked from anywhere and are not indexed.

- Webmasters often make mistakes while setting up robots.txt. These are covered in a different article. Check it out and make sure you avoid them – Common robots.txt mistakes
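
The formatting tips above mention the * and $ special characters and the case-sensitivity of URLs. The following Python sketch shows one way such patterns could be interpreted, by translating them into regular expressions. The helper names pattern_to_regex and matches are hypothetical, and the sample patterns are only illustrations; real crawlers may handle edge cases differently.

```python
import re


def pattern_to_regex(pattern):
    """Translate a robots.txt path pattern into a compiled regular expression."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape every literal piece, then join the pieces with '.*' for each '*'.
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    # Patterns match from the start of the path; '$' pins the end as well.
    return re.compile("^" + body + ("$" if anchored else ""))


def matches(pattern, path):
    """Return True if the path is covered by the pattern (case-sensitive)."""
    return pattern_to_regex(pattern).match(path) is not None


print(matches("/*.pdf$", "/files/report.pdf"))             # True
print(matches("/*.pdf$", "/files/report.pdf?download=1"))  # False
print(matches("/*?sort=", "/shoes?sort=price"))            # True
print(matches("/Admin", "/admin"))                         # False
```

The last call shows why case matters: a rule written for /Admin does not cover /admin.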

Robots Tags

A robots.txt file will instruct the crawler only about which areas of the website it can crawl. However, it won’t tell the crawler whether it can index or not. To help with this, you can use robots tags to guide crawlers about indexing and many other functions. There are two types of robots tags – Meta Robots tag and X-robots tag.

Meta Robots Tag

A Meta Robots tag is an HTML code snippet that instructs search engines on how to crawl or index a certain page. It’s placed in the <head> section of a web page. This is how a Meta Robots tag looks:

<meta name="robots" content="noindex,nofollow">

The Meta Robots tag has two attributes – name and content

Name attribute

For the name attribute, the value is the name of the robot the directive is meant for (Googlebot, MSNbot, etc.). You can also simply set the value to robots, as in the example above, which makes the directive apply to all crawling robots.

Content Attribute

There are various values that can be defined in the content field. The content attribute tells crawlers how they should crawl and index the information on the page. If a page has no robots meta tag, crawlers treat it as index, follow by default.

Here are the different types of values for the content attribute

- all – This directive instructs crawlers that there are no limitations on crawling and indexing. This acts the same as index, follow directives.

- index – Index directive denotes that crawlers are allowed to index the page. This is considered by default. You don’t need to add this to a page necessarily to get it indexed.

- noindex – Instructs crawlers not to index the page. If the page was already indexed, then this directive will instruct the crawler to remove the page from the index.

- follow – Tells search engines to follow all the links on a page and also pass link equity.

- nofollow – Restricts search engines from following links on a page and also passing any equity.

- none – This works the same as noindex, nofollow directives.

- noarchive – Does not show the cached copy of a page in the Search Engine Results Page (SERP).

- nocache – Works the same as noarchive and is recognised by some search engines, such as Bing.

- nosnippet – Does not show a text snippet or video preview for the page in the search results.

- notranslate – Does not allow Google to offer translation of the page in SERP.

- noimageindex – Blocks Googlebot from indexing any images present on the page.

- unavailable_after – Do not show this page in the search results after the defined date/time. Consider it as a noindex tag with a timer.

- max-snippet: – This directive helps you define the maximum number of characters Google should display in SERP for a page. In the example below, the number of characters will be limited to 150.

- E.g. – <meta name="robots" content="max-snippet:150" />

- max-video-preview – This will set up a maximum number of seconds for a video snippet preview. In the example below, Google will show a preview of 10 seconds – <meta name="robots" content="max-video-preview:10" />

- max-image-preview – This tells Google how large the image it displays for a page on SERP should be. There are three possible values:

  - none – No image snippet will be shown

  - standard – Default image preview will be used

  - large – Largest possible preview may be displayed
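
To illustrate how the name and content attributes work together, here is a small Python sketch that reads the robots meta tags from a page and works out whether the page may be indexed and its links followed. It only handles the index/follow family of values (noindex, nofollow, none) and treats everything else as the default. The class RobotsMetaParser and the function indexing_policy are hypothetical names for this example, not a description of how any search engine is implemented.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots" ...> tags."""

    def __init__(self, crawler="robots"):
        super().__init__()
        self.crawler = crawler.lower()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = {key: (value or "") for key, value in attrs}
        # Accept the generic name "robots" or the specific crawler name.
        if attributes.get("name", "").lower() in ("robots", self.crawler):
            for item in attributes.get("content", "").split(","):
                if item.strip():
                    self.directives.add(item.strip().lower())


def indexing_policy(html, crawler="robots"):
    """Return (may_index, may_follow) for the given HTML document."""
    parser = RobotsMetaParser(crawler)
    parser.feed(html)
    found = parser.directives
    # With no tag present, the default is index, follow.
    may_index = not ({"noindex", "none"} & found)
    may_follow = not ({"nofollow", "none"} & found)
    return may_index, may_follow


print(indexing_policy('<head><meta name="robots" content="noindex,nofollow"></head>'))
# (False, False)
print(indexing_policy('<head><meta name="robots" content="noindex, follow" /></head>'))
# (False, True)
print(indexing_policy("<head><title>No robots tag</title></head>"))
# (True, True)
```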

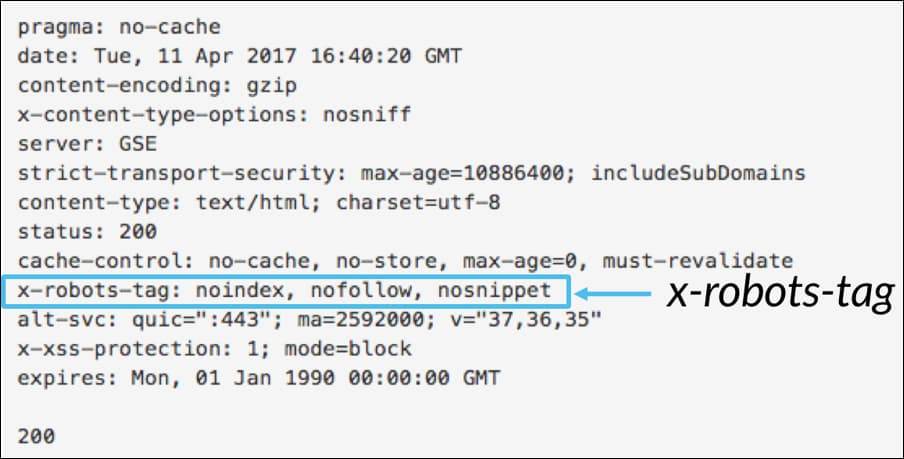

X Robots Tag

The Meta Robots tag can control crawling and indexing only at the page level. The X-robots tag is similar, with one difference: it is sent in the HTTP response header (as X-Robots-Tag) rather than in the page’s HTML, so it can control indexing of either a complete page or specific elements of it. It is mainly used for controlling the crawling and indexing of non-HTML files.

Example of X-Robots tag

As seen in this screenshot, the X-robots tag uses the same set of directives as the Meta Robots tag. To implement the X-robots tag, you need access to the .htaccess file, a .php file or the server configuration file to modify the headers.
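
As a rough illustration of what "tweaking the headers" means, the Python sketch below builds the response headers for a file and adds an X-Robots-Tag header for selected non-HTML file types. The function response_headers and the NOINDEX_EXTENSIONS policy are assumptions made for this example; on a real site the same header would usually be set in the server configuration instead.

```python
import mimetypes

# Hypothetical policy: keep these non-HTML file types out of the index.
NOINDEX_EXTENSIONS = (".pdf", ".doc", ".docx")


def response_headers(path):
    """Build response headers for a file, adding X-Robots-Tag where needed."""
    content_type = mimetypes.guess_type(path)[0] or "application/octet-stream"
    headers = [("Content-Type", content_type)]
    if path.lower().endswith(NOINDEX_EXTENSIONS):
        # Same directives as the Meta Robots tag, sent as an HTTP header.
        headers.append(("X-Robots-Tag", "noindex, nofollow"))
    return headers


for name, value in response_headers("/files/report.pdf"):
    print(f"{name}: {value}")
```

For the PDF in this example, the printed headers include the line X-Robots-Tag: noindex, nofollow, while an HTML page such as /index.html would get no such header.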

Best SEO Practices For Robots Tags

- 1) Do not use Meta Robots and x-robots on the same page as one of them will become redundant.

- 2) In case you don’t want to get the pages indexed but want to pass on the link equity to linked pages, you can use Meta Robots tag with directives as noindex, follow. This is the best technique to control indexing instead of blocking using robots.txt.

- 3) You don’t need to add index or follow directives on each page of your website to get it indexed. It is considered by default.

- 4) In case your pages are indexed, do not block them in robots.txt and use Meta Robots simultaneously. In order to read the Meta Robots tag, crawlers need to crawl the page, and robots.txt blocking won’t allow them to do so. In short, your Meta Robots tag will become redundant.

- In such cases, deploy the robots meta tag first on your pages and wait for Google to de-index them. Once de-indexed, you can block them through robots.txt to save your crawl budget. However, this should generally be avoided, as de-indexed pages can still pass link equity to your important pages. You should block de-indexed pages via robots.txt only if they serve no purpose at all.

- 5) Use X-robots tag to control indexing of non-HTML files like images, PDFs, Flash or video, and so on.
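
The interaction between robots.txt blocking and a noindex tag described in the practices above can be summarised as a small decision function. This is only a sketch of the reasoning in this guide; the function name crawl_index_outcome and the wording of the returned messages are made up for illustration.

```python
def crawl_index_outcome(blocked_in_robots_txt, has_noindex):
    """Describe what a crawler can do with a page under both controls."""
    if blocked_in_robots_txt:
        # The page is never fetched, so any robots tag on it goes unread.
        if has_noindex:
            return "conflict: noindex cannot be seen while the page is blocked"
        return "not crawled; the URL can still be indexed if linked elsewhere"
    if has_noindex:
        return "crawled and kept out of the index"
    return "crawled and eligible for indexing"


print(crawl_index_outcome(blocked_in_robots_txt=True, has_noindex=True))
# conflict: noindex cannot be seen while the page is blocked
```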

Conclusion

Robots.txt and robots tags are essential in controlling the crawling and indexing of your website. There are a number of ways to control how crawlers access your website. However, not all of them will solve your issue. For example, if you want to remove some pages from the index, simply blocking them in robots.txt file won’t help.

The key here is to learn what your website needs and then choose the right method when blocking pages. We hope this guide helps you find the best solution for you.

What method do you use for blocking pages? Let us know in the comments section below.
