High-quality SEO will take you far in boosting your rankings, but technical SEO, and Googlebot optimization in particular, goes a bit deeper.

Optimizing your website for Googlebot is essential. Here are some tips and tricks that can help you get there.

What is Googlebot?

The concept of web crawling is not alien to us: a crawler moves from page to page by following links, much like a spider moving across a web, which fits the name ‘World Wide Web’. Googlebot is the generic name for Google’s web crawler, which simulates a user on either a desktop or a mobile device. It is simply a program that crawls web pages and adds them to Google’s search index.

What is crawlability?

In layman’s terms, crawlability refers to how accessible your site is to Googlebot. Giving Google’s crawlers access to your pages allows those pages to be indexed, and indexed pages are what users can find through Google searches.

Crawlability falls under technical SEO: when search engine bots arrive on your website, you need to ensure they can easily find the information they are looking for. That’s why it’s crucial to have your site analyzed by a top-notch SEO company like Infidigit so that your Google rankings stay healthy.

How does Googlebot Work?

To understand the nuances of how a webpage ranks, it is important to know how the Google crawler works. Googlebot uses sitemaps and a database of links discovered during its previous crawls to chart out where to crawl next on the web. Whenever Googlebot finds new links while crawling a website, it automatically adds them to the list of webpages it is going to visit next.

Additionally, if Googlebot finds that links have changed or broken, it makes a note to update the Google index accordingly. Hence, you must always ensure that your webpages are crawlable, so they can be properly indexed by Googlebot.
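
The discovery loop described above can be pictured as a queue of URLs waiting to be visited. The sketch below is a toy model under stated assumptions, not Google's implementation: the `LINK_GRAPH` dictionary is a made-up stand-in for the web, and `crawl` is a hypothetical helper name.

```python
from collections import deque

# Hypothetical in-memory "web": each URL maps to the links found on that page.
LINK_GRAPH = {
    "https://example.com/": ["https://example.com/blog", "https://example.com/about"],
    "https://example.com/blog": ["https://example.com/blog/post-1", "https://example.com/"],
    "https://example.com/about": [],
    "https://example.com/blog/post-1": ["https://example.com/about"],
}


def crawl(seed_urls, link_graph):
    """Visit pages breadth-first, queueing every newly discovered link."""
    frontier = deque(seed_urls)  # URLs waiting to be crawled
    seen = set(seed_urls)        # URLs already queued, so nothing is queued twice
    visited = []                 # the order in which pages were crawled
    while frontier:
        url = frontier.popleft()
        visited.append(url)
        for link in link_graph.get(url, []):
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return visited


crawl_order = crawl(["https://example.com/"], LINK_GRAPH)
print(crawl_order)
```

A page that no other page links to never enters the queue in this model, which is one way to see why sitemaps and internal links matter for crawlability.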

Different types of Googlebots

Google has many different crawlers, each designed for a particular way of crawling and rendering websites. As a website owner, you will rarely have to set up different directives for each type of crawling bot. They are all treated the same way in the world of SEO unless your website sets up specific directives or meta-commands for particular bots.

There are a total of 17 types of Googlebots:

- APIs-Google

- AdSense

- AdsBot Mobile Web Android

- AdsBot Mobile Web

- Googlebot Image

- Googlebot News

- Googlebot Video

- Googlebot Desktop

- Googlebot Smartphone

- Mobile Apps Android

- Mobile AdSense

- Feedfetcher

- Google Read Aloud

- Duplex on the web

- Google Favicon

- Web Light

- Google StoreBot

How to control Googlebot?

Directing Googlebot to crawl only the parts of your website you want crawled is a wise idea not only for your business website but also for your target audience. It helps ensure that readers arrive at the right pages on your site, which in turn supports your brand credibility. Some of the most popular ways to control Googlebot are the robots.txt file, changing the crawl rate, and applying ‘nofollow’ in your HTML code.

Ways to control crawling

As we mentioned in the previous section, there are quite a few ways to steer Googlebot in order to optimize your website for SERPs. These ways to control crawling include:

- Robots.txt: A robots.txt file tells search engine crawlers which URLs on your site they can access. It is used mainly to avoid overloading your site with requests. It can also keep Googlebot away from unimportant or near-duplicate webpages, but a blocked URL can still show up in search results if other pages link to it; to keep a page out of the results, use noindex instead.

- Nofollow or Disallow Element: A nofollow on a link tells Googlebot not to follow that link, which is useful for links you do not want to vouch for, such as spam links in user comments. That said, a nofollow won’t prevent the linked page from being crawled completely; it just prevents it from being crawled through that specific link.

- Change Your Crawl Rate: The term crawl rate means how many requests per second Googlebot makes to your site when it is crawling it: for example, 5 requests per second. You cannot change how often Google crawls your site, but you can always request a recrawl.
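
To make the robots.txt option more concrete, here is a minimal sketch that checks a set of rules with Python's standard `urllib.robotparser` module. The rules, the `example.com` URLs and the `is_allowed` helper are assumptions made up for illustration, and Python's parser only approximates how Googlebot itself interprets the file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; the paths are illustrative only.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /cart/

User-agent: *
Disallow: /admin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse from memory, no network request


def is_allowed(user_agent, url):
    """Return True if the rules above let this user agent fetch the URL."""
    return parser.can_fetch(user_agent, url)


print(is_allowed("Googlebot", "https://example.com/blog/post"))      # allowed
print(is_allowed("Googlebot", "https://example.com/cart/checkout"))  # blocked
print(is_allowed("OtherBot", "https://example.com/admin/"))          # blocked by the * group
```

Note that a crawler with its own group follows only that group: in this sketch the `/admin/` rule in the `*` group does not apply to Googlebot, so anything you want to keep away from Googlebot has to be listed under its own `User-agent` line as well.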

Ways to Control Indexing

- Delete your content

Poorly performing content can have a negative impact on the overall performance of your business website. This is because Google evaluates content quality page by page but also aggregates all of the indexed pages when it evaluates a website for quality. Hence, it is advisable to delete or improve weak content pages.

- Restrict access to the content

Sometimes, when your website ranks for specific queries on SERPs, information that was never meant for the public appears in the result list. To avoid exposing data not intended for your target audience, certain content pages can be locked or restricted from view. Some of the ways of doing this are archiving content, creating specialized content, or restricting the indexing of large datasets.

- Noindex

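If you wish to prevent a page from being indexed on Google SERPs, “noindex” is a rule set with either a <meta> tag or an HTTP response header. When Googlebot crawls that page and sees the rule, it drops the page entirely from Search results, regardless of whether other sites link to it. For this to work, the page must not be blocked by robots.txt, otherwise Googlebot never sees the rule.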

<!DOCTYPE html>
<html><head>
<meta name="robots" content="noindex">
…
</head>
<body>
…
</body>
</html>
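
As a rough way to audit this, the sketch below checks whether a page carries a noindex rule in either place. It uses only Python's standard `html.parser`; the `RobotsMetaParser` class and `is_noindex` helper are hypothetical names, and the header lookup assumes a plain dictionary keyed exactly as `X-Robots-Tag`.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() in ("robots", "googlebot"):
            content = attributes.get("content") or ""
            self.directives.update(d.strip().lower() for d in content.split(","))


def is_noindex(html, headers=None):
    """Return True if the page opts out of indexing via meta tag or response header."""
    parser = RobotsMetaParser()
    parser.feed(html)
    # Assumption: headers is a plain dict with the exact key "X-Robots-Tag".
    header_value = (headers or {}).get("X-Robots-Tag", "")
    header_directives = {d.strip().lower() for d in header_value.split(",")}
    return "noindex" in parser.directives or "noindex" in header_directives


PAGE = '<!DOCTYPE html><html><head><meta name="robots" content="noindex"></head><body></body></html>'
print(is_noindex(PAGE))
print(is_noindex("<html></html>", {"X-Robots-Tag": "noindex, nofollow"}))
```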

- URL removal tool

This is a tool that keeps pages from appearing in the search results. Unlike noindex, the tool doesn’t remove your URL from the Google index; it only hides it from users temporarily.

How do you optimize your website for Googlebot?

Before working on the rest of your SEO, you must optimize your website for Googlebot to give it the best chance of ranking well in SERPs. To ensure that your website is accurately and easily indexed by Google, follow these tips:

- Correct Robots.txt

Robots.txt serves as a directive for Googlebot. It helps Googlebot understand where to spend its crawl budget, which means you can decide which pages on your website Googlebot can crawl and which ones it cannot.

By default, Googlebot crawls and indexes everything it comes across. Hence, you must be very careful about which pages or sections of your website you are blocking. Robots.txt tells Googlebot where not to go, so review it and correct any rule that blocks the relevant parts of your website from the Google crawler.

- Use internal links

A map is very helpful when you visit an area for the first time, and internal links play the same role for Googlebot. As the bot crawls your website, internal links help it navigate through the various pages and crawl them comprehensively. The more tightly knit your website’s internal linking is, the better Googlebot will be able to crawl your website.

You can use tools like Google Search Console to analyze how well integrated your website’s internal linking structure is.
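
For a quick local check of a single page, a sketch along these lines lists the internal links a crawler could follow from it. It relies only on Python's standard library; the `LinkCollector` class, the `internal_links` helper and the sample markup are assumptions for illustration.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collect the href value of every <a> tag."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def internal_links(page_url, html):
    """Return the absolute URLs of links that stay on the page's own host."""
    collector = LinkCollector()
    collector.feed(html)
    host = urlparse(page_url).netloc
    links = []
    for href in collector.hrefs:
        absolute = urljoin(page_url, href)  # resolve relative links
        if urlparse(absolute).netloc == host and absolute not in links:
            links.append(absolute)
    return links


# Hypothetical page markup.
SAMPLE_HTML = """
<a href="/services">Services</a>
<a href="blog/post-1">Post</a>
<a href="https://other.example.org/">Partner</a>
<a href="/services">Services again</a>
"""
print(internal_links("https://example.com/", SAMPLE_HTML))
```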

- Use Sitemap.xml

The sitemap of a website is a very clear guide for Googlebot on how to access your website: sitemap.xml lists the URLs you want crawled. Googlebot might otherwise miss pages in a complicated website architecture or lose track while crawling. Sitemap.xml helps it avoid missteps and ensures that it can crawl through all the relevant areas of your website.
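
A basic sitemap is just an XML list of URLs, so it is easy to generate. The following is a minimal sketch using Python's standard `xml.etree.ElementTree`; the `build_sitemap` helper and the example URLs are made up for illustration, and optional fields such as `<lastmod>` are left out.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return sitemap XML as a string, with one <url><loc> entry per URL."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url  # special characters are escaped on output
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


sitemap_xml = build_sitemap([
    "https://example.com/",
    "https://example.com/blog",
])
print(sitemap_xml)
```

The resulting string can be saved as `sitemap.xml` at the site root and then submitted to Google.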

- Check Canonicals

One of the most common problems for large websites, especially in the e-commerce domain, is handling duplicate pages. Duplicate or near-duplicate pages can exist for many practical reasons, such as multilingual pages. However, they can prevent your site from being properly indexed by Googlebot if not handled carefully.

If you have duplicate pages on your website for any reason, it is imperative that you mark them with canonical tags so that Googlebot knows which URL is the preferred version. For pages that are language or regional variants of each other, you can also use the hreflang attribute to tell Googlebot how they relate.
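
One way to review canonicals is to group your pages by the canonical URL each one declares. The sketch below does this for pages held in memory; the `CanonicalParser` class, the `group_by_canonical` helper and the sample pages are hypothetical, and treating a page without a tag as its own canonical is an assumption of this sketch, not a rule Google states.

```python
from html.parser import HTMLParser


class CanonicalParser(HTMLParser):
    """Remember the href of the first <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel and self.canonical is None:
            self.canonical = attributes.get("href")


def canonical_url(html):
    parser = CanonicalParser()
    parser.feed(html)
    return parser.canonical


def group_by_canonical(pages):
    """Map each canonical URL to the list of page URLs that point at it."""
    groups = {}
    for url, html in pages.items():
        # Assumption: a page with no canonical tag counts as its own canonical.
        target = canonical_url(html) or url
        groups.setdefault(target, []).append(url)
    return groups


# Hypothetical pages: two product URLs share one canonical.
PAGES = {
    "https://example.com/shoes": '<link rel="canonical" href="https://example.com/shoes">',
    "https://example.com/shoes?color=red": '<link rel="canonical" href="https://example.com/shoes">',
    "https://example.com/contact": "<p>No canonical tag</p>",
}
print(group_by_canonical(PAGES))
```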

- Site speed

The loading speed of your website is a very important element to optimize, since page speed is one of Google’s ranking factors. Google assesses how long your website takes to load, and if it is slow, there is a real possibility that your rankings will suffer.

- Clean URL Structure

Having a clean and precise URL structure helps your SEO and also improves the user experience. Hence, you must ensure that your URL taxonomy is defined and clean throughout the website.

Googlebot can understand how pages relate to each other better when the URL structure is clean. This is an activity that must start right from the beginning of your website development.

If you have old pages that rank high, it is recommended that you don’t change their URLs. However, if you believe a change will help your website, make sure to set up 301 redirects from the old URLs to the new ones. Also update your sitemap.xml to let Googlebot know of the change so it can update the index.
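
When URLs change more than once, redirects can pile up into chains. As a sketch of one possible hygiene step (an addition of this example, not something the tips above require), the code below takes a map of old to new paths and points every old URL straight at its final destination. The `REDIRECTS` map and both helper names are hypothetical.

```python
def resolve_redirect(url, redirects, max_hops=10):
    """Follow the redirect map until a URL with no further redirect is reached."""
    hops = 0
    seen = {url}
    while url in redirects:
        url = redirects[url]
        hops += 1
        if url in seen or hops > max_hops:
            raise ValueError("redirect loop or chain too long")
        seen.add(url)
    return url, hops


def flatten_redirects(redirects):
    """Return a new map where every old URL redirects directly to its final target."""
    return {old: resolve_redirect(old, redirects)[0] for old in redirects}


# Hypothetical 301 map: /old-page was moved twice.
REDIRECTS = {
    "/old-page": "/new-page",
    "/new-page": "/final-page",
    "/promo-2019": "/offers",
}
print(flatten_redirects(REDIRECTS))
```

The flattened map is what you would then configure as 301 rules in your web server or CMS.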

- Content

The quality of content on your website can be crucial in determining how well it ranks on Google. Google’s algorithms assess the quality of the content on the pages Googlebot crawls. Hence, you must ensure that your content is high-quality and SEO optimized, so that it can improve your domain authority.

- Image optimization

Image optimization can significantly help Googlebot determine how the images relate to the content, and boost the SEO health of your content.

Here are some ways in which you can carry out image optimization on your website:

- Image file name – What the image is, described in as few words as possible

- Image alt text – Describe what the image is in more words, and possibly use keywords to enhance its SEO

- Image Sitemap – Google supports image sitemaps, either as a separate sitemap or as image entries in your existing one, so that Googlebot can more easily discover your images.
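
The alt text point is easy to audit automatically. Here is a minimal sketch that lists the images on a page whose alt text is missing or empty; the `ImageAuditor` class, the `images_missing_alt` helper and the sample markup are assumptions for illustration.

```python
from html.parser import HTMLParser


class ImageAuditor(HTMLParser):
    """Record the src of every <img> whose alt text is missing or blank."""

    def __init__(self):
        super().__init__()
        self.missing_alt = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            attributes = dict(attrs)
            if not (attributes.get("alt") or "").strip():
                self.missing_alt.append(attributes.get("src"))


def images_missing_alt(html):
    auditor = ImageAuditor()
    auditor.feed(html)
    return auditor.missing_alt


# Hypothetical markup: one well-described image and two without alt text.
SAMPLE_IMAGES = (
    '<img src="/img/red-running-shoes.jpg" alt="Red running shoes on a track">'
    '<img src="/img/IMG_0042.jpg">'
    '<img src="/img/banner.png" alt="">'
)
print(images_missing_alt(SAMPLE_IMAGES))
```

An empty alt attribute can be a deliberate choice for purely decorative images, so treat the output as a list to review rather than a list of errors.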

- Create simple and fresh content

Google is very user-focused in its ranking factors. Making the content on your website easily accessible, easy to understand, and fresh are the three pillars that can significantly optimize your website for Googlebot.

Google’s algorithms analyze what Googlebot collects to determine whether your content is fresh, whether it is easy to understand, and how well it engages users.

- Control Crawling

Always check that your website’s URLs follow Google’s guidelines and that you do not rely heavily on duplicate content. This will help ensure that Googlebot crawls your site in the right manner.

-

Control Indexing

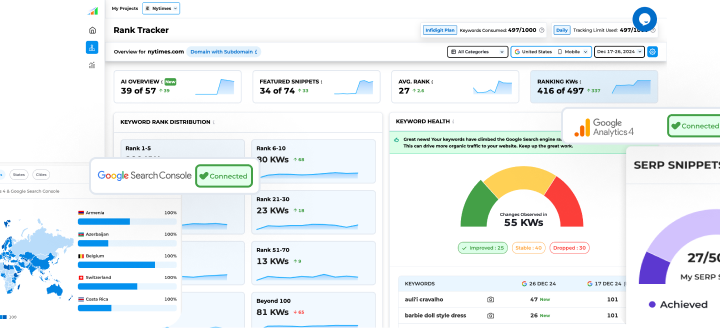

First, you need to submit your sitemap to the Google Search Console. This gives you a better chance of turning up in SERPs when a user conducts a search. Indexing is a vital component of crawling bots’ agenda, and important sites of your site should be accessible.

- Optimize infinite scrolling pages

The goal of each website is to make visitors spend more time on the platform and consume more information, so that the bounce rate decreases. Infinite scrolling is a tactic used for this: it shows content in chunks, and once the scroll reaches the bottom of the page, more content loads. This way readers can stay longer on the page, which increases the chances of getting clicks and earning ad revenue. Because Googlebot does not scroll the way a user does, make sure each chunk of content is also reachable through its own paginated URL.

All in all, you need to keep tabs on how well Googlebot can crawl and index your website.

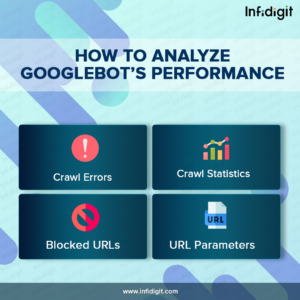

How to analyze Googlebot’s performance

Analyzing Googlebot’s performance is quite easy and accessible compared to other engines’ SEO bots. You can use Google Search Console (formerly Google Webmaster Tools) to obtain valuable insights about how Googlebot is performing on your website.

All you need to do is log in to Search Console and open the crawl-related reports to check all the relevant diagnostic data. Here are the features that you must regularly check and analyze:

- Crawl Errors

The tool can point out whether Googlebot is facing any crawling issues on your website. As Googlebot crawls your website, the report flags any problems it runs into. These red flags include pages that Googlebot expected to find on your website, based on its last crawl, but that are no longer there.

While a few crawl errors might seem insignificant, they can grow over time and be correlated with a steady decline of traffic on your website. The most common crawl errors the tool identifies are URL Errors and Site Errors.

- Crawl Statistics

The tool also shows how many kilobytes and pages Googlebot downloads from your site per day. If you are running a content marketing campaign, you are regularly pushing out new content for Googlebot to crawl, which should lead to an upward tick in the stats. An upward trend suggests that your new pages are being properly crawled, and you can keep a check on the ones that might be facing crawl errors and resolve them.
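
If you have access to your server logs, you can cross-check these numbers yourself. The sketch below counts requests per day whose user agent mentions Googlebot. It assumes the common "combined" access-log layout; the sample lines, the `LOG_PATTERN` regular expression and the `googlebot_hits_per_day` helper are made up for illustration and will need adjusting to your server's actual format.

```python
import re
from collections import Counter

# Assumed "combined" access-log layout; adjust the pattern to your server's format.
LOG_PATTERN = re.compile(
    r'\[(?P<day>\d{2}/\w{3}/\d{4}):[^\]]+\] "(?:GET|HEAD) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"

# Hypothetical log lines.
SAMPLE_LOG = [
    f'66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] "GET /blog/post-1 HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.7 - - [10/Oct/2023:14:01:02 +0000] "GET / HTTP/1.1" 200 1024 "-" "Mozilla/5.0 (Windows NT 10.0)"',
    f'66.249.66.1 - - [10/Oct/2023:15:12:09 +0000] "GET /services HTTP/1.1" 200 2048 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [11/Oct/2023:09:00:00 +0000] "GET /old-page HTTP/1.1" 404 512 "-" "{GOOGLEBOT_UA}"',
]


def googlebot_hits_per_day(log_lines):
    """Count log entries per day whose user agent contains "Googlebot"."""
    hits = Counter()
    for line in log_lines:
        match = LOG_PATTERN.search(line)
        if match and "Googlebot" in match.group("agent"):
            hits[match.group("day")] += 1
    return dict(hits)


print(googlebot_hits_per_day(SAMPLE_LOG))
```

Keep in mind that the user agent string can be spoofed, so counts like these are an estimate unless the requesting IP addresses are also verified as Google's.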

- Fetch as Google

This feature lets you fetch and view a page in the same fashion as Googlebot would. This helps you analyze individual pages and find scope for improvement to boost their SEO. In the current Search Console, the URL Inspection tool plays this role.

- Blocked URLs

It is always advisable to keep a regular check on all the blocked URLs on your website, so that you know your robots.txt file is working the way it is supposed to. The blocked URLs feature shows which pages are blocked by robots.txt.

- URL Parameters

You might face some issues depending on how much duplicate content your website has, whether due to dynamic URLs or some other reason. By default, Googlebot decides on its own how to crawl pages whose URLs differ only in their parameters. “URL Parameters” is a feature that lets you configure how Googlebot crawls and indexes your website based on those parameters, to avoid any setbacks from duplicate pages.

How will Googlebot crawl your site every day?

Googlebot recrawls pages based on what it crawled previously. When the crawler lands on a page, it picks up the links on it in order to crawl further pages. To understand what the page is about, Google renders it and analyzes its overall layout and text. As Google uses mobile-first indexing, it looks at the mobile version of a website first when analyzing it.

Optimize your Website for Googlebots Today

Now that you know how to use Googlebot to your advantage, it is time to put in the work and ensure that your website is thoroughly indexed by Google. The help of SEO professionals like Infidigit can be valuable in this complicated process. With years of experience in providing holistic SEO services, Infidigit can help your website achieve its maximum potential and help you navigate the world of Googlebot. Contact us today to learn more.
